Endeavor Physical Therapy

Endeavor Physical Therapy is an outpatient physical and hand therapy clinic that provides premier physical therapy to patients with musculoskeletal injuries, both before and after surgery. We treat the entire body, including the shoulders, spine, hips, knees, elbows, hands, and ankles, and offer services for a wide range of musculoskeletal disorders, orthopedic injuries, and conditions, all specifically tailored to you.

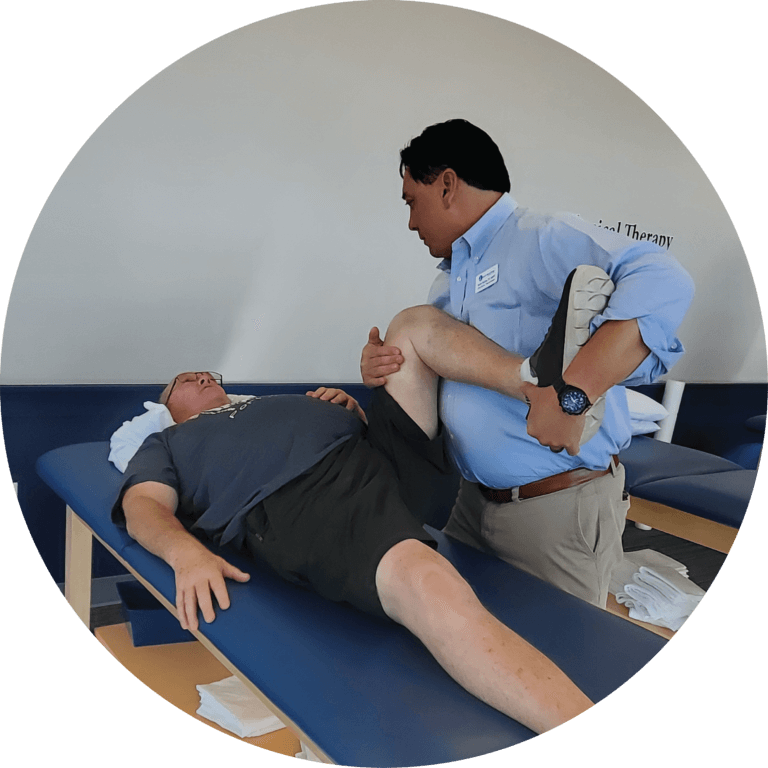

Through joint mobilization, therapeutic modalities such as dry needling, and functional exercise, our physical therapists provide both manual and non-manual therapy to target the problem and deliver custom-tailored rehabilitation programs that get real results. Our goal is to maximize your mobility and function and to improve your overall quality of life. As an owner-operated clinic, we can make our patients' needs our top priority.

Our Locations

South Austin

3809 S 2nd St

Suite D100

Austin, TX 78704

Southwest Austin

3100 W. Slaughter Ln

Suite 101

Austin, TX 78748

Northwest Austin, Four Points

6911 Ranch Road 620 North

Suite B-200

Austin, TX 78732

North Central Austin

1033 La Posada Drive

Suite 230

Austin, TX 78752

Anderson Lane

3202 W. Anderson Lane

Suite 202

Austin, TX 78757

Hutto

210 Ed Schmidt Hwy

Suite 250

Hutto, TX 78634

Domain

12001 N. Burnet Rd

Suite G

Austin, TX 78758

Cedar Park

1120 Cottonwood Creek Trail

Bldg B, Ste 220

Cedar Park, TX 78613

Kyle

920 Kohlers Crossing

Bldg E, Ste 550

Kyle, TX 78640

Manor

11300 Hwy 290 E

Bldg 1, Ste 140

Manor, TX 78653

Pflugerville

1601 E. Pflugerville Parkway

Bldg 1, Ste 1202

Pflugerville, TX 78660

San Marcos

151 Stagecoach Trail

Suite 103

San Marcos, TX 78666

Round Rock East

896 Summit St.

Suite 102

Austin, TX 78664

Round Rock West

16020 Park Valley Dr.

Austin, TX 78681

Bee Cave

3944 Ranch Road 620 S

Bldg 8, Ste 203

Bee Cave, TX 78738

Waco

1200 Richland Dr

Suite G

Waco, TX 76710

(formerly Bosque River Physical Therapy)

Sun City

4847 Williams Dr

Suite 103

Georgetown, TX 78633

Georgetown Physical Therapy

3415 Williams Drive

Suite 145

Georgetown, TX 78628

Services